RAG or Fine-Tuning? Which Is Best For Generative AI

Nov 28, 2023

AI is a fast-developing field that is being adopted for a growing range of use cases. The past decade has seen rapid improvements, especially in Natural Language Processing. Key milestones in the field are summarized below:

Language Modelling (1951)

Embeddings (2003)

Transformers (2017)

LLMs like BERT (2018 onward)

The most recent wave of generative AI models is the talk of the town, especially after the release of GPT-3.5 by OpenAI. A single model can serve a variety of purposes, such as email generation and text summarization. Generative AI models combined with user prompts form the basis of chat completion models. Users with no prior programming knowledge can interact with chat completion models in plain human language, as already happens with ChatGPT and LLaMA.

Sounds interesting, right? You are not alone, as most firms feel the same way. The swift adoption of such models is backed by a recent Gartner webinar poll of more than 2,500 executives: 38% chose customer experience and retention as the primary purpose of their generative AI investments, followed by revenue growth (26%), cost optimization (17%) and business continuity (7%).

Advantages and Disadvantages for Sellers

Let’s analyze generative AI from the seller's perspective.

Advantages for sellers:

Easy and effective generation of content like email replies

Accurate, personalized responses to customer needs

In-depth explanation of a product's pros and cons during a sales pitch

Disadvantages and primary blockers for companies adopting generative AI:

Data Privacy Issue: Proprietary data can leak, and data sent along with the prompt is at the highest risk.

Hallucination: The answers are not always reliable and can change over time for the exact same question.

Challenges Companies Face With Generative AI

1. Risk of Market Disruption by AI: Companies need to consider the potential for generative AI to disrupt their market. Key areas to evaluate include:

Creative Sectors: The advent of generative AI in fields like advertising, design, gaming, media, and entertainment could render traditional methods obsolete.

Document-Intensive Processes: In sectors such as legal, insurance, and HR, generative AI could revolutionize how documents are processed.

2. Opportunities for Revenue Growth: Ignoring generative AI might lead to missed opportunities in several domains:

Customer Interaction: Enhanced AI-driven chat and call center solutions could significantly improve customer support.

Search and Recommendation Systems: These could become more sophisticated and accurate with generative AI.

Productivity Tools: AI could bring advancements in automated note-taking, summarization, and information aggregation, enhancing overall productivity.

3. Leveraging Existing Assets for AI Opportunities: Companies should evaluate their inherent strengths that could give them an edge in adopting AI technologies. This might include:

Access to Proprietary Data: Unique datasets can provide a competitive advantage.

Established User Base: A pre-existing customer base can facilitate easier integration and adoption of new AI-driven services.

4. Deciding Between Building or Buying: A crucial decision is whether to develop in-house AI solutions or partner with external vendors. This decision should be guided by the specific needs and context of the business.

Generative AI Challenges

Outdated information: Models are trained on data up to a fixed cutoff, while in today's fast-paced world new data keeps arriving.

Hallucination: Results can be unreliable and irreproducible.

Task-Specific Poor Performance: Because the training data is generic, it is difficult for a model to perform exceptionally well on every individual task.

Bias: Early examples showed gender bias in model-generated text, which in turn leads to compliance issues.

The above shortcomings can be reduced with further training, but training an LLM from scratch is very costly. A good example is GPT-4, whose training reportedly cost about $100 million.

Another way to overcome these shortcomings is by applying RAG and fine-tuning. Let’s discuss the benefits and drawbacks of each method.

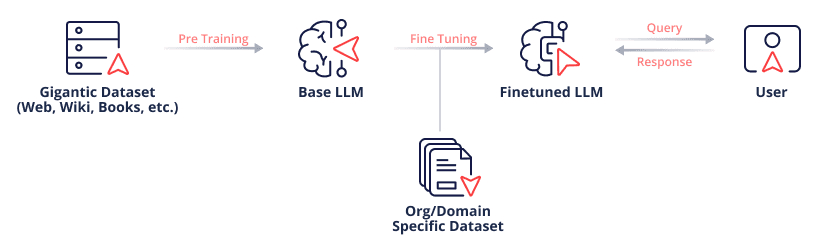

Fine-Tuning and its Challenges

Fine-tuning refers to further training the weights of a pre-trained LLM. It uses a large task-specific dataset to adjust the weights so that the model becomes better suited to the task the dataset represents. Common examples of such tasks include credit card fraud detection and email spam detection.
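The idea of continuing training from existing weights can be sketched at toy scale. The example below is only an illustration, assuming a tiny logistic model in place of an LLM: the `pretrained` weights and the `task_data` examples are invented, and `fine_tune` is a hypothetical helper that simply runs more gradient descent on the task data.

```python
import math


def predict(weights, features):
    """Logistic model: probability that the example is in the positive class."""
    z = sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))


def average_loss(weights, dataset):
    """Mean binary cross-entropy over (features, label) pairs."""
    total = 0.0
    for features, label in dataset:
        p = predict(weights, features)
        total -= label * math.log(p) + (1 - label) * math.log(1 - p)
    return total / len(dataset)


def fine_tune(weights, dataset, learning_rate=0.5, epochs=200):
    """Continue gradient descent from existing weights on task-specific data."""
    weights = list(weights)  # copy so the starting weights stay untouched
    for _ in range(epochs):
        gradient = [0.0] * len(weights)
        for features, label in dataset:
            error = predict(weights, features) - label
            for i, x in enumerate(features):
                gradient[i] += error * x / len(dataset)
        weights = [w - learning_rate * g for w, g in zip(weights, gradient)]
    return weights


# Hypothetical starting weights standing in for a pre-trained model.
pretrained = [0.1, -0.1, 0.0]
# Invented toy task data: (bias, feature_1, feature_2) -> label (1 = spam).
task_data = [
    ([1.0, 1.0, 0.0], 1),
    ([1.0, 0.9, 0.1], 1),
    ([1.0, 0.1, 0.9], 0),
    ([1.0, 0.0, 1.0], 0),
]

tuned = fine_tune(pretrained, task_data)
print("loss before:", average_loss(pretrained, task_data))
print("loss after: ", average_loss(tuned, task_data))
```

The loss on the task data drops after the extra training steps, which is the effect fine-tuning aims for. Real LLM fine-tuning follows the same principle but with billions of weights, which is where the hardware and data costs come from.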

Fine-Tuning Workflow

Every coin has two sides, and fine-tuning has its own disadvantages:

Training data must be cleaned to remove noise, and such refined data is expensive to obtain.

Regular fine-tuning is costly; moreover, updating the data frequently is difficult.

Tuning the model requires large machines, which are costly.

Data privacy issues can arise, as some training data may end up stored in the model's weights.

RAG overcomes some of these limitations. Let’s see its advantages and disadvantages.

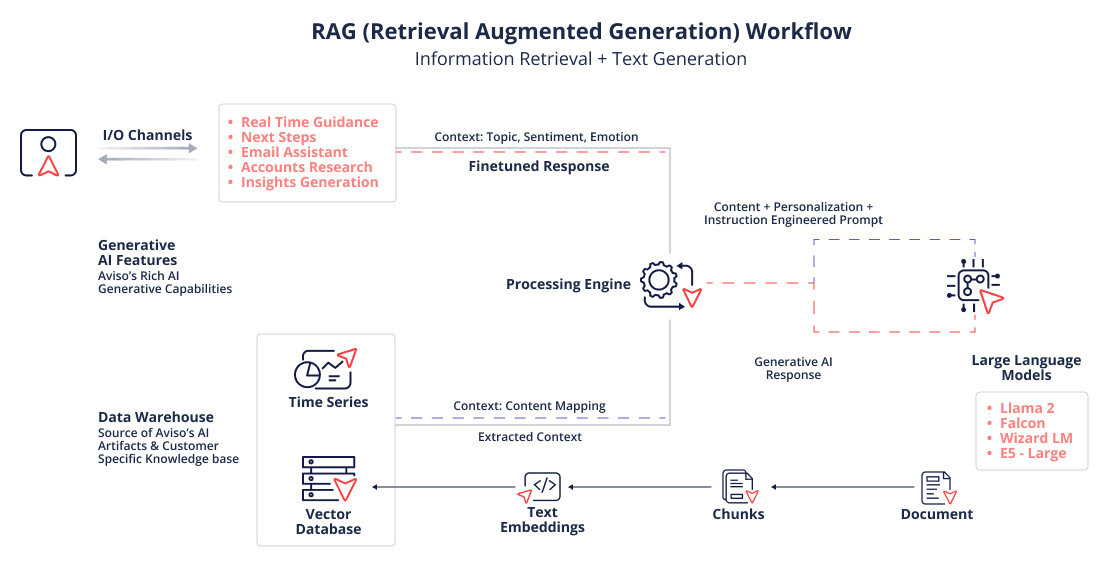

RAG (Retrieval-Augmented Generation)

RAG combines two steps: retrieval and generation.

Retrieval: As discussed before, an LLM only knows the fixed data it was trained on, so any other factual information it needs must be supplied to it. Selecting the appropriate chunks from a large data source is therefore the first step. It makes the answer more specific and up to date.

Generation: The second step is to generate the answer using the selected documents and the model's pre-trained weights.

Let’s understand by breaking down the RAG process for the following user query:

What are the positive developments, as discussed in the latest earnings call of Aviso?

An LLM alone cannot answer this question, because its training data does not include the latest earnings call. RAG plays a crucial role in such cases.

Retrieval: The documents most relevant to the question can be fetched using cosine similarity over documents stored as vectors in a vector database.

Generation: Once the relevant documents are fetched, we feed them to the LLM along with the question and rely on it to respond meaningfully, since it now has the relevant context.
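The two steps can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production pipeline: `embed` is a toy bag-of-words stand-in for a real embedding model, a plain list replaces the vector database, the documents are invented placeholders, and `build_prompt` is a hypothetical helper that only assembles the text an LLM would receive.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a, b):
    """Cosine similarity between two sparse vectors stored as Counters."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, documents, top_k=1):
    """Return the top_k documents ranked by cosine similarity to the query."""
    query_vec = embed(query)
    ranked = sorted(
        documents,
        key=lambda doc: cosine_similarity(query_vec, embed(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(query, context_docs):
    """Place the retrieved text ahead of the question for the generation step."""
    context = "\n".join(f"- {doc}" for doc in context_docs)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


# Invented placeholder documents, not real company data.
DOCUMENTS = [
    "Earnings call transcript: management discussed positive developments in revenue.",
    "Cafeteria menu for Friday: pasta, salad and soup.",
    "Release notes: the mobile app fixed a login bug.",
]

query = "What are the positive developments discussed in the latest earnings call?"
top_docs = retrieve(query, DOCUMENTS, top_k=1)
prompt = build_prompt(query, top_docs)
print(prompt)
```

In a real system the prompt would then be sent to an LLM for the generation step, and the embeddings would come from a trained model so that similarity reflects meaning and not just shared words.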

The biggest benefit of this approach is that the model does not depend on a data refresh: when the data changes, only the document store needs updating, not the model.

Still, the following challenges are expected to be faced with RAG:

If the relevant documents are not present in the first place, or the wrong documents are fetched, the chance of a meaningful answer drops sharply, mainly because LLMs start hallucinating in such scenarios.

Inference costs more with RAG, but overall it is cheaper than fine-tuning regularly.

Finding the right breakpoints for splitting large documents before storing them is still challenging, as there is no deterministic way to do so. One can chunk the documents into lines, paragraphs or even pages.
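The three chunking granularities mentioned above can be compared with a short sketch. It is illustrative only: the `chunk` helper and the sample text are hypothetical, and treating a form feed character as the page break is an assumption that holds for some PDF-to-text tools but not for every source.

```python
import re

# Separator pattern for each granularity.
# Treating form feed (\f) as a page break is an assumption.
SEPARATORS = {
    "line": r"\n+",
    "paragraph": r"\n\s*\n",
    "page": r"\f",
}


def chunk(text, unit="paragraph"):
    """Split text into non-empty chunks at the chosen granularity."""
    pieces = re.split(SEPARATORS[unit], text)
    return [piece.strip() for piece in pieces if piece.strip()]


# Invented sample: two lines, a blank line, a paragraph, then a page break.
sample = "Title line\nSecond line\n\nNew paragraph here.\fText on page two."

for unit in ("line", "paragraph", "page"):
    print(unit, len(chunk(sample, unit)))
```

Smaller chunks make retrieval more precise but can cut away needed context, while larger chunks keep context at the price of longer prompts, so the granularity usually has to be tuned per dataset.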

RAG Applications to Improve Sales Productivity

RAG can power an efficient chatbot that saves sellers time and answers their questions in real time. It also helps with the following:

Helping sellers choose appropriate products for buyers, especially when a company has a very wide range of products.

Sparing them from memorizing niche information. For example, if a company that provides translation services is asked how many languages it supports and which ones, sales reps can look up the answer immediately instead of memorizing it.

Composing emails in real time using the latest data from the database. For example, after a meeting, the seller may want to send a summary of what was discussed along with a few of the latest product recommendations and their features. This is where RAG is helpful.

RAG and Fine-Tuning Together

Then what is better to use? RAG or fine-tuning?

There is no universal answer. It depends on the use case, as both have their own advantages and disadvantages. The best method is to use both. To use a metaphor, a doctor needs specialty training (fine-tuning) and access to a patient’s medical chart (RAG) to make a diagnosis.

RAG keeps the data dynamic and up to date. Fine-tuning addresses the challenges that are slow to change and makes inference faster. Using RAG together with fine-tuning also helps the model decide, to some extent, when to use external documents and when not to.

Generative AI at Aviso

According to a Gartner report, by 2024, 40% of enterprise applications will have embedded conversational AI, up from less than 5% in 2020. So don’t be left behind: adopt it in your company to get ahead of your competitors and provide better services to customers.

Aviso is one such company, using the latest models and technologies in the industry to help sellers close deals. Aviso’s generative AI helps sellers with their sales pitch through features such as:

Generating an overall buyer score that indicates how likely the buyer is to purchase the product.

Generating a sentiment score that captures the buyer's sentiment.

Identifying buyers’ sentiment towards products, which helps sellers pitch the product accordingly.

Generating an email on the fly as per the use case.

Book a demo now and get to know how it can be of help to you.